- What A/B Testing Means in Data Science

- What Makes Data-Science-Driven A/B Testing Different from Normal Split Tests

- Why Shopify Stores Should Use Data Science Methods

- Advanced A/B Testing Models Used in Modern Data Science

- Bayesian A/B Testing (Faster Insights, Ideal for Lower-Traffic Stores)

- 8 Steps to Run a Data-Science A/B Test for Your Shopify Store

- Final thoughts

- Frequently Asked Questions

A/B testing plays a different role when viewed through a data-science lens, and that difference matters for Shopify merchants. You may change page designs, CTAs, or product images based on intuition. However, without statistical validation, those “wins” are often misleading.

This guide breaks down the data-science principles behind reliable testing and shows how to apply them to real Shopify pages without complexity or jargon. By the end, you’ll know how to test smarter, reduce risk, and make decisions grounded in real customer behavior.

What A/B Testing Means in Data Science

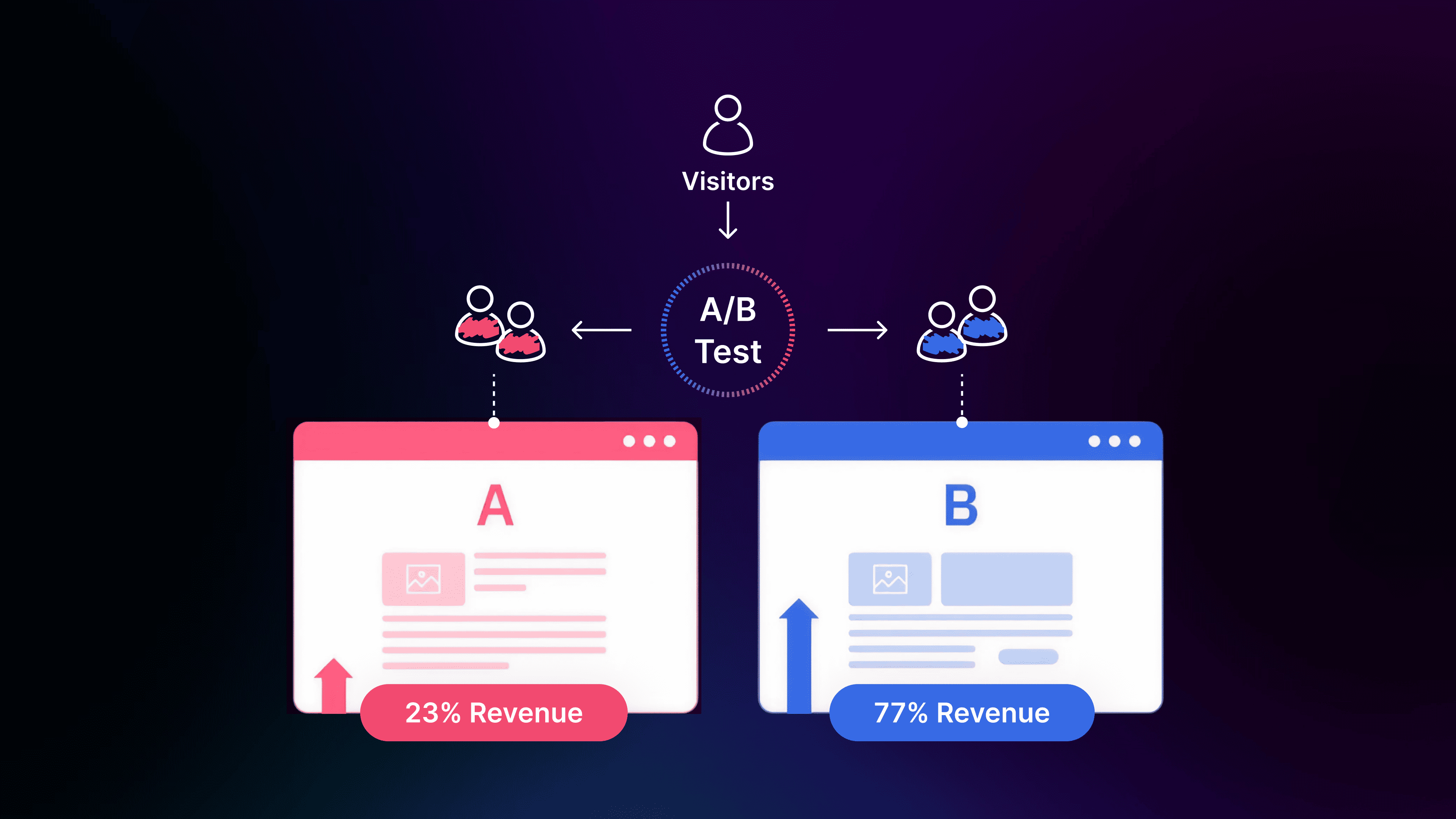

In data science, A/B testing is a controlled experiment designed to compare two versions of something, whether a product page, checkout flow, or algorithm, to determine which one creates a measurable improvement. Instead of relying on guesswork, data-science-led testing uses randomization, clean data, and statistical models to confirm whether the change actually caused the improvement.

For Shopify stores, this matters because customer behavior is often unpredictable. A layout that works for one store may underperform in another depending on audience, device type, traffic source, or market. According to Shopify’s Commerce Trends 2024 report, even small UX differences can influence conversion rates by 10–30% depending on the segment.

Actionable example:

If you’re testing a new product card design, a data-science approach ensures you’re comparing two clean, unbiased customer groups. This prevents misleading results caused by uneven traffic, time-of-day fluctuations, or promotional periods.
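One common way to get clean, unbiased groups is to assign each visitor deterministically from a hash of their ID, so the same person always sees the same version. The sketch below is illustrative only: the visitor IDs, the experiment name `product-card-v2`, and the function `assign_variant` are hypothetical and not part of any Shopify or GemX API.

```python
import hashlib

def assign_variant(visitor_id: str, experiment: str, variants=("control", "variant")) -> str:
    """Deterministically map a visitor to a variant using a salted hash."""
    # Salting with the experiment name keeps assignments independent across experiments.
    key = f"{experiment}:{visitor_id}".encode("utf-8")
    digest = hashlib.sha256(key).hexdigest()
    bucket = int(digest[:8], 16) % len(variants)
    return variants[bucket]

# Simulate 10,000 hypothetical visitor IDs and count how they split.
counts = {"control": 0, "variant": 0}
for i in range(10_000):
    counts[assign_variant(f"visitor-{i}", "product-card-v2")] += 1
print(counts)
```

Because the assignment depends only on the ID and the experiment name, time of day and traffic source cannot influence which group a visitor lands in.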

What Makes Data-Science-Driven A/B Testing Different from Normal Split Tests

Most marketers run A/B tests by changing one element and watching the conversion rate. But data scientists apply deeper rigor to ensure the result is truly valid. Here’s how the data-science version differs:

1. Stronger control of variables

A/B tests in data science isolate a single change and keep all other factors constant. For Shopify stores, this might mean:

- Same traffic source distribution

- Similar discounting conditions

- Consistent product stock levels

If any external factor changes, the experiment can become biased.

2. Use of statistical safeguards

Data scientists account for variance, seasonal trends, and random noise. Retail behavior fluctuates by hour, day, and campaign period—which is why running tests through high-variance periods (like BFCM) can distort results.

3. Emphasis on experiment quality, not quantity

High-quality, statistically valid tests produce fewer false decisions. McKinsey reports that companies using scientific experimentation frameworks grow revenue 2–3x faster than those relying on intuition.

Use case:

A store tests a new hero banner design. A marketer might celebrate a +16% CTR lift after 3 days, but a data scientist would check:

- Was the sample size large enough?

- Did returning users skew the numbers?

- Did mobile convert differently than desktop?

This approach prevents premature decisions and wasted development time.

Why Shopify Stores Should Use Data Science Methods

Most Shopify merchants run “quick tests” based on intuition, but very few validate whether those changes truly affected conversions. Data-science methods close this gap by giving stores a reliable, repeatable way to understand customer behavior. When competition and acquisition costs keep rising, you can no longer afford guesswork. This is where data-science-led experimentation becomes a real competitive advantage.

Better Decisions Based on Causation, Not Guessing

Many merchants confuse correlation with causation. For example, conversions might spike right after a homepage redesign, but that doesn’t mean the redesign caused it. Data-science frameworks (randomization, control groups, clean segmentation) help isolate true cause and effect.

Shopify example:

A store changes its product image size and sees a short-term conversion lift. A data-science test reveals the spike came from weekend traffic and returning customers—not the design change.

Avoid “False Winners” That Waste Budget and Time

Common reasons merchants get false results:

- Ending tests too early

- Uneven mobile vs desktop traffic

- Running tests during discount periods

- Not isolating new vs returning visitors

Data-science techniques such as variance reduction, segment analysis, and clean hypothesis design reduce the risk of investing in changes that don’t actually improve performance.

Make Testing Work Even With Low or Irregular Traffic

Many smaller Shopify stores have <5,000 sessions/month and assume A/B testing isn’t viable. But data-science models such as Bayesian inference and CUPED help extract meaningful insights faster.

Practical benefits for low-traffic stores:

- Shorter testing periods

- Clearer directional insights

- Reduced impact of random variance

- More stability even with uneven daily traffic

Protect Revenue With Guardrails and Risk Controls

Data-science methods always include guardrail metrics, secondary indicators that protect store performance while testing.

Example guardrails for Shopify merchants:

- Checkout errors

- Site speed

- Bounce rate

- Cart abandonment from mobile

If a test variant violates the guardrail (e.g., hurts mobile UX), data-science workflows catch it early before it costs revenue.

Real Insights That Improve Long-Term Business Decisions

Beyond conversion lifts, data-science testing uncovers deeper truths about customer behavior:

- Which segments respond differently

- How discount sensitivity changes by traffic source

- What messaging resonates with high-LTV buyers

- How small UX changes affect browsing depth

These insights shape product strategy, pricing, merchandising, and customer experience, not just page design.

Advanced A/B Testing Models Used in Modern Data Science

As Shopify stores mature, simple “A vs B” comparisons often aren’t enough. Traffic fluctuations, limited sample sizes, and multi-device behavior can distort basic tests. Modern data-science models help merchants extract clearer signals, speed up learning, and reduce the risk of shipping the wrong variant.

Understanding these models lets you choose the right method for your store, especially when using a platform like GemX, which supports advanced statistical evaluation natively for Shopify merchants.

Frequentist A/B Testing (Classic, Reliable, and Great for Stable Traffic)

The frequentist model is the traditional approach behind most A/B tests. It evaluates whether the difference between control and variant is statistically significant against a pre-set threshold (often a 95% confidence level, equivalent to p < 0.05).

Best for Shopify stores when:

- You receive steady traffic every day

- Your test focuses on a single variable

- You want a clear “yes/no” decision without ongoing recalculation

- Your store has predictable performance throughout the week

Limitations:

- Frequentist tests require a set duration, decided before launch

- Merchants must avoid “peeking” at interim results, or the validity breaks

- The method doesn’t adapt to traffic volatility
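As a rough illustration of the frequentist check described in this section, the sketch below runs a two-sided two-proportion z-test on conversion counts. The counts are made up for the example, and `two_proportion_z_test` is a hypothetical helper, not a function from GemX or Shopify.

```python
from math import sqrt
from statistics import NormalDist

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Return (z, two-sided p-value) for the difference in conversion rates."""
    p_a = conv_a / n_a
    p_b = conv_b / n_b
    # Pooled rate under the null hypothesis that both variants convert equally.
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return z, p_value

# Hypothetical result: 300/10,000 conversions on control, 360/10,000 on the variant.
z, p_value = two_proportion_z_test(conv_a=300, n_a=10_000, conv_b=360, n_b=10_000)
print(f"z = {z:.2f}, p-value = {p_value:.4f}")
```

The p-value is only trustworthy if the sample size was fixed in advance and the test is evaluated once, which is exactly the "no peeking" limitation listed above.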

Bayesian A/B Testing (Faster Insights, Ideal for Lower-Traffic Stores)

Bayesian testing evaluates probability—“How likely is variant B to be better than A?”—instead of waiting for fixed significance thresholds. It adapts as new data comes in, making it practical for Shopify stores with inconsistent or smaller traffic.

Why it matters for merchants:

- Produces meaningful directional insights early

- Works well for stores with <5K daily sessions

- Handles uneven traffic patterns without breaking validity

- Helps merchants avoid running long, inconclusive tests

Shopify reports that 53% of independent merchants struggle with low or inconsistent daily traffic. Bayesian evaluation helps these stores learn meaningfully without waiting weeks.
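To make the "how likely is B to be better than A?" question concrete, here is a minimal sketch using a Beta-Binomial model with a uniform prior and Monte Carlo sampling. The prior choice, the visitor counts, and the helper name `prob_b_beats_a` are assumptions for illustration; a production tool may use different priors or a closed-form calculation.

```python
import random

def prob_b_beats_a(conv_a, n_a, conv_b, n_b, draws=20_000, seed=42):
    """Estimate P(rate_B > rate_A) by sampling from each variant's posterior."""
    rng = random.Random(seed)
    wins = 0
    for _ in range(draws):
        # Posterior under a uniform Beta(1, 1) prior:
        # Beta(1 + conversions, 1 + non-conversions).
        rate_a = rng.betavariate(1 + conv_a, 1 + n_a - conv_a)
        rate_b = rng.betavariate(1 + conv_b, 1 + n_b - conv_b)
        wins += rate_b > rate_a
    return wins / draws

# Hypothetical low-traffic store: 1,000 visitors per variant.
print(prob_b_beats_a(conv_a=30, n_a=1_000, conv_b=42, n_b=1_000))
```

The output is a probability rather than a pass/fail verdict, so a merchant can decide in advance what level of certainty (for example 95%) justifies shipping the variant.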

CUPED Variance Reduction (More Accuracy, Less Testing Time)

CUPED (Controlled Experiments Using Pre-Experiment Data) is a variance-reduction method used heavily by data teams at Microsoft and Airbnb. It leverages historical user behavior to stabilize experiment results and reduce noise.

For Shopify merchants, this matters because daily store performance often fluctuates due to:

- Paid campaign bursts

- Influencer traffic surges

- Weekends vs weekdays

- New vs returning user ratio shifts

CUPED helps reduce these fluctuations so insights come faster.
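The core CUPED adjustment is small enough to sketch directly: subtract from each visitor's in-experiment metric the part that is predictable from their pre-experiment behavior. The example below uses synthetic spend data and a hypothetical helper `cuped_adjust`; in a real test the coefficient is typically estimated on both groups pooled together and then applied to each group.

```python
import random
from statistics import mean, variance

def cuped_adjust(metric, covariate):
    """Return CUPED-adjusted values: y - theta * (x - mean(x))."""
    x_bar = mean(covariate)
    y_bar = mean(metric)
    # Sample covariance between the pre-experiment covariate and the metric.
    cov_xy = sum((x - x_bar) * (y - y_bar) for x, y in zip(covariate, metric)) / (len(metric) - 1)
    theta = cov_xy / variance(covariate)
    return [y - theta * (x - x_bar) for x, y in zip(covariate, metric)]

# Synthetic data: in-experiment spend is strongly correlated with pre-experiment spend.
rng = random.Random(0)
pre_spend = [rng.gauss(50, 15) for _ in range(2_000)]
spend = [0.8 * x + rng.gauss(10, 5) for x in pre_spend]

adjusted = cuped_adjust(spend, pre_spend)
print(f"variance before: {variance(spend):.1f}, after: {variance(adjusted):.1f}")
```

The adjusted metric keeps the same mean but has lower variance, which is why the same effect can be detected with fewer visitors. The gain depends entirely on how well past behavior predicts the metric, so it helps little for brand-new visitors with no history.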

Benefits for Shopify stores:

- Shorter test duration

- Improved precision

- Lower exposure to losing variants

- Better reliability when running tests with irregular traffic

Important note: GemX uses CUPED under the hood (where applicable) to improve accuracy in high-variance Shopify experiments, especially template or multipage tests that touch multiple user journeys.

Sequential Testing (Smarter Stopping Without Compromising Validity)

Sequential testing allows merchants to check performance at multiple pre-planned points without breaking the statistical validity of the test, unlike peeking at a fixed-duration test.

When to use sequential testing:

- You want to stop early if a clear loser emerges

- You’re testing something risky (e.g., checkout changes)

- Your traffic volume varies, and you need flexible timing

- You want to protect buyers from prolonged exposure to a bad variant
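One simple, deliberately conservative way to allow several looks is to split the overall significance level evenly across the planned looks (a Bonferroni correction). The sketch below assumes four planned looks and made-up cumulative counts; dedicated sequential designs such as alpha-spending boundaries are more efficient, and `sequential_check` is a hypothetical helper rather than any platform's method.

```python
from math import sqrt
from statistics import NormalDist

def sequential_check(conv_a, n_a, conv_b, n_b, planned_looks, alpha=0.05):
    """Return (p_value, stop) for one interim look using alpha / planned_looks."""
    pooled = (conv_a + conv_b) / (n_a + n_b)
    se = sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (conv_b / n_b - conv_a / n_a) / se
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    # Stop only if the p-value clears the stricter per-look threshold.
    return p_value, p_value < alpha / planned_looks

# Hypothetical cumulative counts at each look: (conv_a, n_a, conv_b, n_b).
looks = [
    (30, 1_000, 38, 1_000),
    (62, 2_000, 80, 2_000),
    (95, 3_000, 128, 3_000),
    (121, 4_000, 172, 4_000),
]
for i, counts in enumerate(looks, start=1):
    p_value, stop = sequential_check(*counts, planned_looks=len(looks))
    print(f"look {i}: p = {p_value:.4f}, stop = {stop}")
    if stop:
        break
```

In this made-up data the third look would pass a naive 0.05 threshold but not the corrected one, which is the kind of premature call the correction is meant to prevent.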

Multi-Armed Bandits (Real-Time Optimization for Campaigns and Fast Decisions)

Multi-armed bandits automatically shift traffic toward the best-performing variant while the test runs. This isn’t a replacement for traditional A/B testing but rather a complementary method for time-sensitive scenarios.

Best for merchants when:

- Running flash sales or seasonal campaigns

- Testing homepage hero messaging during a promotion

- Optimizing announcements or banners

Statista notes that promotional periods spike e-commerce traffic by up to 120% on peak days. During such events, bandit algorithms maximize performance instead of waiting weeks for statistical significance.
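Thompson sampling is one widely used bandit strategy, and a small simulation shows how it shifts traffic toward the better variant as evidence accumulates. The conversion rates, visitor count, and function name below are invented for illustration, and nothing here is meant to describe how a specific tool allocates traffic.

```python
import random

def thompson_sampling(true_rates, visitors, seed=7):
    """Simulate Thompson sampling and return how many visitors each arm received."""
    rng = random.Random(seed)
    successes = [0] * len(true_rates)
    failures = [0] * len(true_rates)
    for _ in range(visitors):
        # Draw a plausible conversion rate for each arm from its Beta posterior.
        samples = [rng.betavariate(1 + s, 1 + f) for s, f in zip(successes, failures)]
        arm = samples.index(max(samples))
        # Simulate whether this visitor converts on the chosen arm.
        if rng.random() < true_rates[arm]:
            successes[arm] += 1
        else:
            failures[arm] += 1
    return [s + f for s, f in zip(successes, failures)]

# Hypothetical banners converting at 2% and 8%.
pulls = thompson_sampling(true_rates=[0.02, 0.08], visitors=5_000)
print(pulls)
```

Because traffic is no longer split evenly, the result is harder to analyze as a clean experiment afterwards, which is why bandits complement rather than replace a standard A/B test.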

Uplift Modeling & Causal Inference (Advanced: For Stores Scaling into Personalization)

While not every merchant needs these methods today, it’s useful to understand where the industry is heading. Uplift modeling predicts not just whether a variant works, but for whom it works best. Causal inference isolates deeper behaviors beyond surface-level metrics.

Useful when merchants scale to:

- Personalized PDPs

- Targeted product recommendations

- Audience-driven messaging

- Cohort-level retention improvements

For Shopify Plus stores running personalization or audience-based merchandising, uplift modeling becomes a powerful differentiation tool.

Important note: While uplift modeling is not yet a standard Shopify feature, GemX’s segmentation insights and Path Analysis give merchants a foundational understanding of cohort behaviors.

8 Steps to Run a Data-Science A/B Test for Your Shopify Store

Running an A/B test is easy; running a test you can trust is the real challenge. Many Shopify merchants change elements on their store without a framework, leading to misleading results or wasted energy. A scientific process supported by A/B testing tools like GemX can ensure every test produces reliable, revenue-driving insight.

Step 1: Define Your Hypothesis & Expected Outcome

Every valid experiment starts with a precise hypothesis. Instead of “Let’s try a new banner,” a clear hypothesis describes what will change, who it affects, and how improvement will be measured.

Use the PICOT structure to keep your hypothesis grounded in data:

- Population: target audience segment

- Intervention: the change you’re testing

- Comparison: control vs variant

- Outcome: the metric you expect to influence

- Time: how long you’ll collect data

Shopify example:

“For first-time visitors (P), placing review stars above the product title (I) compared to below the fold (C) will increase add-to-cart rate (O) over a 14-day period (T).”

Step 2: Select the Metrics That Matter Most

Your experiment’s success depends on choosing the right metrics. GemX helps merchants structure these metrics clearly by requiring a primary KPI (e.g., conversion rate, ATC rate) and allowing guardrail metrics to ensure no harm is done during testing.

Typical primary metrics for Shopify stores:

- Add-to-cart rate

- Product page conversion rate

- Click-through rate

- Revenue per visitor

Helpful guardrail metrics:

- Mobile bounce rate

- Checkout friction indicators

- Page load performance

- Cart abandonment

Baymard’s research puts the average documented cart abandonment rate at roughly 70%, and checkout friction is among the commonly cited causes, which illustrates why guardrails are critical for safe optimization.

Step 3: Segment Your Audience Properly

A/B tests quickly lose validity if segments don’t behave similarly. Differences in new vs returning traffic, device distribution, or ad vs organic sessions all influence outcomes.

Segments to consider carefully:

- New vs returning users: very different intent patterns

- Mobile vs desktop: Shopify reports 70–80% mobile traffic across most verticals

- Paid vs organic traffic: paid traffic often has higher short-term intent

Step 4: Set Sample Size and Testing Duration

Stopping a test early is the fastest way to get the wrong answer. A scientifically valid A/B test needs enough traffic and a long enough timeframe to smooth out natural behavior fluctuations.

General guidelines for Shopify stores:

- Run most tests for at least 14 days

- Include both high- and low-intent days

- Avoid running tests during major sales unless testing seasonal behavior

- Use a sample-size calculator to determine requirements
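For readers curious what a sample-size calculator does internally, the sketch below applies the standard normal-approximation formula for comparing two conversion rates. The 3% baseline, 20% relative lift, 5% significance level, and 80% power are example inputs, not recommendations, and `sample_size_per_variant` is a hypothetical helper.

```python
from math import ceil
from statistics import NormalDist

def sample_size_per_variant(baseline_rate, relative_lift, alpha=0.05, power=0.80):
    """Approximate visitors needed per variant for a two-sided two-proportion test."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for the significance level
    z_beta = NormalDist().inv_cdf(power)           # critical value for the desired power
    variance_sum = p1 * (1 - p1) + p2 * (1 - p2)
    return ceil((z_alpha + z_beta) ** 2 * variance_sum / (p2 - p1) ** 2)

# Example: 3% baseline conversion rate, hoping to detect a 20% relative lift.
n = sample_size_per_variant(baseline_rate=0.03, relative_lift=0.20)
print(n)
```

Dividing the required visitors per variant by your typical daily traffic per variant gives a rough duration, which you can then round up to whole weeks so weekday and weekend behavior are both covered.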

Step 5: Design Your Variants With UX Principles

Your variant should isolate one meaningful change, not multiple tweaks at once. Strong variants follow clear UX logic aligned with the hypothesis.

Variant ideas for Shopify merchants:

- Reduce clutter on mobile PDPs

- Add trust builders like reviews or badges

- Adjust CTA hierarchy

- Change price anchoring or bundle layout

- Prioritize high-performing imagery above the fold

With GemX: CRO & A/B Testing, Shopify merchants can build clean variants without damaging existing live pages, ensuring controlled and low-risk experimentation.

Step 6: Launch Your Experiment With Proper Tracking Setup

Before launching, verify that tracking is accurate. Even small discrepancies introduce bias.

Pre-launch checklist:

- Ensure add-to-cart events fire consistently

- Validate that control and variant receive balanced traffic

- Verify product availability and pricing are identical

- Check mobile speed and interaction metrics

- Confirm UTMs and analytics tools receive correct signals
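The "balanced traffic" item in the checklist above can be verified with a sample ratio mismatch (SRM) check: a chi-square test of the observed visitor split against the intended one. The visitor counts below are invented, `srm_p_value` is a hypothetical helper, and the cutoff you treat as a red flag (a very small p-value such as 0.001 is a common choice) is your own decision.

```python
from math import erfc, sqrt

def srm_p_value(visitors_control, visitors_variant, expected_share_control=0.5):
    """Chi-square goodness-of-fit p-value for the observed traffic split."""
    total = visitors_control + visitors_variant
    expected_control = total * expected_share_control
    expected_variant = total - expected_control
    chi2 = ((visitors_control - expected_control) ** 2 / expected_control
            + (visitors_variant - expected_variant) ** 2 / expected_variant)
    # With two groups there is 1 degree of freedom, so the upper-tail
    # probability of the chi-square distribution equals erfc(sqrt(chi2 / 2)).
    return erfc(sqrt(chi2 / 2))

print(srm_p_value(5_050, 4_950))  # small imbalance, plausibly random
print(srm_p_value(5_400, 4_600))  # large imbalance, likely a tracking or routing bug
```

A very small p-value suggests the split itself is broken, in which case the conversion results should not be trusted until the cause is found.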

Step 7: Monitor the Test Without Interfering With It

Once the test begins, resist the urge to tweak or “optimize” midway. Even minor adjustments can invalidate the test entirely.

Instead, monitor for anomalies:

- Sudden traffic spikes

- Tracking errors

- Device ratio imbalance

- Promo codes affecting only one variant

Step 8: Analyze Results and Document Learnings

When your test reaches statistical validity, analyze the results across multiple layers, not just the top-level metric.

A thorough interpretation includes:

- Primary metric outcomes

- Guardrail check

- Segment-level insights

- Effect size and confidence level

- Operational effort required to deploy

- A decision: Ship, iterate, or hold

GemX Experiment Analytics simplifies this by showing significance levels, segment performance, and funnel drop-off patterns, giving merchants a more complete understanding of user behavior.

Losing tests matter just as much as winning ones. A “failed” test can reveal critical insights about messaging, layout, or audience preferences.

Learn more: How to View and Read Your Experiment Results

Final thoughts

Strong experimentation is more than a growth tactic; it is how Shopify merchants learn what truly improves their customer experience. By understanding the mechanics behind testing, applying data-science methods, and using tools that protect data quality, store owners can make decisions with far more clarity and confidence. As you adopt these practices, you’ll see how data-science-driven A/B testing turns everyday store changes into measurable, repeatable wins.

Keep exploring new ideas, stay curious about your customers, and continue building smarter pages and experiments with resources from GemX.

Frequently Asked Questions

1. What is A/B testing in data science?

A/B testing in data science is a controlled experiment that compares two versions of a page, element, or experience to see which performs better. It relies on statistical methods to confirm whether the improvement is meaningful rather than random, helping Shopify merchants make confident, data-backed decisions.

2. How does A/B testing for data science apply to Shopify stores?

For Shopify merchants, it helps validate whether layout tweaks, pricing formats, images, or messaging actually improve conversions. Data-science methods—like segmentation, significance testing, and variance reduction—ensure the results reflect real customer behavior, not temporary spikes or biased traffic patterns.

3. Do I need a large amount of traffic to run data-science A/B tests?

Not always. While classic frequentist tests work best with consistent traffic, Shopify stores can use Bayesian models or CUPED variance reduction to learn from smaller datasets. These approaches help low-traffic merchants get reliable directional insights without running tests for weeks.

4. How long should a scientifically valid A/B test run?

Most Shopify stores should run tests for 14–21 days to capture weekday and weekend patterns. The exact duration depends on traffic volume, expected effect size, and statistical method. Ending tests too early risks false results, especially during fluctuating periods like promotions or ad spikes.

5. What are the biggest mistakes merchants make when A/B testing?

Common issues include testing too many variables at once, stopping early, ignoring mobile behavior, running tests during sales periods, or using unbalanced traffic sources. Shopify stores also often misinterpret random fluctuations as real wins, something data science models help prevent.

6. What tools help run data-science-level A/B tests on Shopify?

Merchants can use platforms designed for Shopify, such as GemX, which supports accurate tracking, segment analysis, anomaly detection, and advanced statistical methods. These features help ensure A/B test outcomes are valid, reproducible, and directly tied to store performance.