A/B testing is often seen as a reliable way to improve conversion rates and make data-driven decisions. In reality, many teams run test after test and still walk away with unclear results, or worse, confident conclusions based on misleading data.

The problem usually isn’t the idea being tested. It’s how the test is designed, run, or interpreted. Without the right experimentation mindset, even simple A/B tests can fail to produce insights that actually move the business forward. Before diving into specific A/B testing mistakes, it’s important to understand what A/B testing is really meant to do.

How to Think About A/B Testing (Before Fixing Mistakes)

A/B testing isn’t about proving that a new idea is better. It’s about learning how users behave when a single variable changes under controlled conditions.

At its core, a well-designed A/B test answers one narrow question: Does this specific change influence a specific user action?

Problems start when teams treat A/B testing as a shortcut to quick wins. Tests are launched without clear boundaries, multiple assumptions are bundled together, or results are expected to explain more than the experiment was designed to measure. When that happens, outcomes feel random: tests “fail,” winners don’t replicate, and confidence in experimentation erodes.

With that foundation in place, we can now look at the most common A/B testing mistakes teams make at each stage of an experiment and how to avoid them.

Pre-Testing A/B Testing Mistakes

Most A/B testing problems don’t start when a test is running. They start much earlier, during the planning phase. Decisions made before an experiment goes live often determine whether the results will be useful or misleading.

At this stage, teams usually feel confident. The idea sounds reasonable, the page gets traffic, and the test setup looks simple enough. That confidence is exactly what makes pre-testing mistakes easy to miss.

Mistake 1: Starting a Test Without a Clear Hypothesis

Many teams launch A/B tests based on intuition rather than a defined hypothesis. The test exists, but the question it is supposed to answer remains vague.

When a hypothesis is missing, the test has no clear success condition. As a result, teams often focus on whatever metric looks interesting after the fact, rather than the metric that actually matters.

A solid hypothesis does not need to be complex, but it does need to be specific. It should clearly connect three elements:

- The change being introduced
- The expected impact on user behavior
- The metric used to evaluate that impact

Without this structure, test results tend to feel inconclusive, even when one variant appears to outperform the other.
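One way to make this three-part structure hard to skip is to write every hypothesis down in a fixed shape before the test is configured. The sketch below assumes a plain Python record. The `Hypothesis` class and its field names are hypothetical and not part of any testing tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Hypothesis:
    """Hypothetical record tying one change to one expected effect and one metric."""
    change: str           # the single variable being altered
    expected_impact: str  # the user behavior the change should influence
    primary_metric: str   # the metric that decides success

    def is_complete(self) -> bool:
        # Usable only if all three elements are filled in before launch.
        return all(part.strip() for part in (self.change, self.expected_impact, self.primary_metric))

    def statement(self) -> str:
        return (
            f"If we {self.change}, then {self.expected_impact}, "
            f"measured by {self.primary_metric}."
        )


h = Hypothesis(
    change="move shipping costs above the add-to-cart button",
    expected_impact="fewer shoppers will abandon at checkout",
    primary_metric="checkout completion rate",
)
print(h.is_complete())
print(h.statement())
```

Refusing to launch until `is_complete()` returns `True` is one simple way to stop the success condition from being chosen after the fact.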

Mistake 2: Choosing the Wrong Primary Metric

A common planning error is selecting a metric that does not match the goal of the test. Click-through rate, time on page, or engagement often look attractive because they move quickly, but they do not always reflect meaningful business outcomes.

This mismatch creates confusion later. A test may look successful on the surface while failing to improve conversions, revenue, or funnel progression.

Before running a test, the primary metric should answer one question clearly:

If this number improves, does the business actually benefit?

Secondary metrics can still be monitored, but they should not compete with the main success signal of the experiment.

Mistake 3: Testing Pages That Don’t Drive Impact

Not every page is a good candidate for A/B testing. Pages with low traffic or weak connections to conversion goals rarely provide enough data or insight to justify the effort.

This mistake often happens when teams test what feels visible rather than what matters most. Informational pages, low-traffic landing pages, or secondary content can consume time without producing meaningful learnings.

Before committing to a test, it helps to ask:

- Does this page influence a key step in the conversion funnel?
- Is there enough traffic to reach a reliable result?

If the answer to either question is unclear, the test may not be worth running yet.

Mistake 4: Ignoring Traffic Reality and Sample Size

A/B testing requires patience and volume. Planning a test without accounting for traffic levels often leads to experiments that run for weeks without reaching reliable conclusions.

Low traffic does not mean testing is impossible, but it does require adjusted expectations. In some cases, qualitative insights or funnel analysis provide more value than a formal split test.

A realistic plan considers:

- Current traffic and conversion rates
- The minimum sample size needed to detect the smallest effect worth acting on
- How long the test is expected to run

Without this context, teams often stop tests too early or misinterpret early fluctuations as meaningful trends.
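The sample size and duration in this plan can be estimated before launch. The sketch below uses the standard normal-approximation formula for comparing two conversion rates with a two-sided test. The 5% significance level, 80% power, and the function names are illustrative assumptions, not fixed rules.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, relative_mde: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Visitors needed in each arm of a two-sided, two-proportion test."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_mde)  # smallest lift worth detecting
    p_bar = (p1 + p2) / 2
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # two-sided critical value
    z_beta = NormalDist().inv_cdf(power)
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


def expected_duration_days(n_per_variant: int, daily_visitors: int, variants: int = 2) -> int:
    """Days needed if all eligible daily visitors are split across the variants."""
    return math.ceil(n_per_variant * variants / daily_visitors)


n = sample_size_per_variant(baseline_rate=0.03, relative_mde=0.10)
days = expected_duration_days(n, daily_visitors=2_000)
print(n, days)
```

With a 3% baseline and a 10% relative lift as the smallest effect worth detecting, this works out to roughly 53,000 visitors per variant, so a page with 2,000 daily visitors would need close to two months. Numbers like these are often the clearest signal that a page is better served by qualitative research than by a split test.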

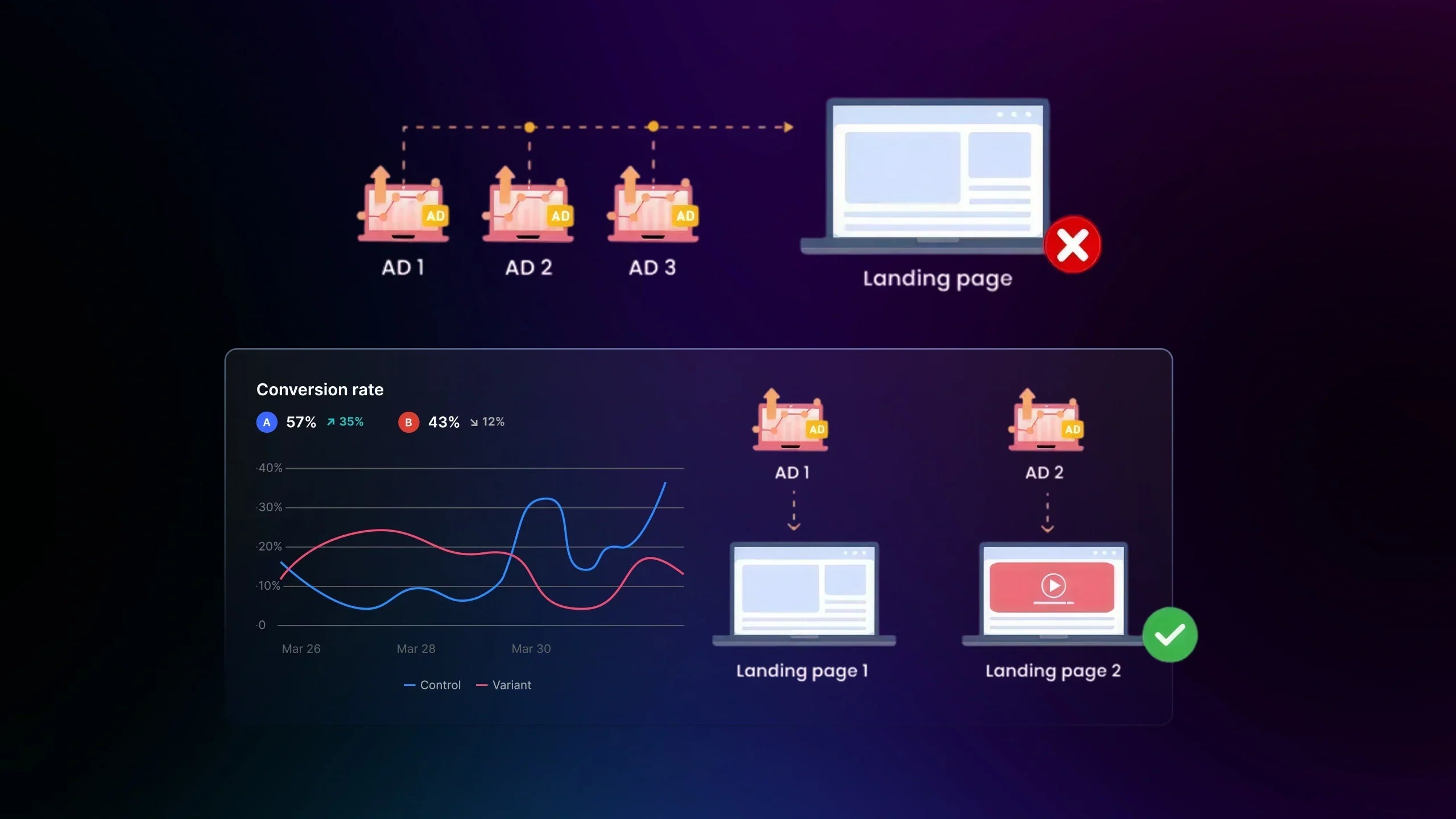

Mistake 5: Bundling Multiple Assumptions Into One Test

Another frequent pre-testing mistake is trying to validate too many assumptions at once. A single variant ends up changing layout, messaging, visuals, and calls to action simultaneously.

While this approach feels efficient, it reduces clarity. When performance changes, the reason remains unclear. The test may produce a result, but it does not produce insight.

Strong A/B tests focus on isolating one core assumption at a time. This makes outcomes easier to interpret and learnings easier to reuse in future experiments.

Mid-Testing A/B Testing Mistakes

Once an A/B test is live, most issues no longer come from strategy. They come from execution. On Shopify, this stage is where small technical or operational details quietly distort results.

At a glance, everything may look fine. Traffic is flowing, variants are visible, and numbers are updating in the dashboard. Under the surface, however, many tests are already compromised by factors that are easy to overlook in a real store environment.

Mistake 6: Ending the Test Too Early

One of the most common execution mistakes is stopping a test as soon as one variant appears to be winning. Early spikes in conversion rate often look convincing, especially during the first few days.

On Shopify stores, this is even more misleading. Daily traffic patterns, marketing campaigns, and returning customers can create short-term fluctuations that do not hold over time.

A test should run long enough to capture:

- Weekday and weekend behavior
- Repeat visitors and new sessions
- Normal traffic volatility

Without sufficient duration, early results often reflect timing rather than true performance.
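One way to enforce a full-cycle duration is to round the sample-size estimate up to whole weeks and commit to a stopping rule before launch, so a strong first few days cannot end the test. The two-week minimum and the function names below are assumptions for illustration.

```python
import math


def planned_test_days(required_days: int, min_weeks: int = 2) -> int:
    """Round a sample-size-driven duration up to whole weeks (assumed two-week floor)."""
    weeks = max(min_weeks, math.ceil(required_days / 7))
    return weeks * 7


def can_stop(days_elapsed: int, visitors_per_variant: int,
             planned_days: int, required_per_variant: int) -> bool:
    # Stop only when BOTH the planned calendar window and the sample target
    # are met, regardless of how the dashboard looks in between.
    return days_elapsed >= planned_days and visitors_per_variant >= required_per_variant


print(planned_test_days(9))
```

A test whose sample target is met on day 9 would still run until day 14, so every day of the week appears twice in both variants.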

Mistake 7: Uneven or Unverified Traffic Splitting

Many teams assume traffic is split correctly once a test is launched. In practice, uneven traffic allocation is a frequent problem, especially when multiple apps or theme customizations are involved.

On Shopify, issues like caching, script conflicts, or page-specific logic can cause one variant to receive more exposure than expected. When this happens, conversion differences become unreliable.

During a live test, it is important to:

- Verify that traffic is being distributed as planned
- Monitor early data for obvious imbalance
- Confirm variants load consistently across sessions

Ignoring this step can invalidate results before the test reaches meaningful volume.
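Uneven allocation can be checked with a sample ratio mismatch (SRM) test: a chi-square goodness-of-fit test that compares observed visitors per variant with the planned split. The sketch assumes a two-variant test. The 0.001 alert threshold is a commonly used convention rather than a requirement, and the function names are hypothetical.

```python
import math


def srm_p_value(visitors_a: int, visitors_b: int, expected_share_a: float = 0.5) -> float:
    """Chi-square goodness-of-fit p-value for a two-variant split (1 degree of freedom)."""
    total = visitors_a + visitors_b
    expected_a = total * expected_share_a
    expected_b = total - expected_a
    chi2 = ((visitors_a - expected_a) ** 2 / expected_a
            + (visitors_b - expected_b) ** 2 / expected_b)
    # With 1 degree of freedom, the chi-square survival function is erfc(sqrt(chi2 / 2)).
    return math.erfc(math.sqrt(chi2 / 2))


def has_sample_ratio_mismatch(visitors_a: int, visitors_b: int,
                              expected_share_a: float = 0.5, threshold: float = 0.001) -> bool:
    return srm_p_value(visitors_a, visitors_b, expected_share_a) < threshold


print(has_sample_ratio_mismatch(10_000, 10_600))
print(has_sample_ratio_mismatch(10_000, 10_050))
```

A 10,000 versus 10,600 split is flagged, while 10,000 versus 10,050 is not. A flagged result usually points to a delivery problem such as caching or a script conflict, and the conversion numbers from that test should not be trusted until it is fixed.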

Mistake 8: Running Tests During Abnormal Store Activity

Sales events, promotions, product launches, or paid traffic spikes can heavily influence user behavior. Running A/B tests during these periods often produces results that cannot be generalized.

For Shopify merchants, this risk is especially high around discount campaigns or seasonal peaks. Conversion behavior during these periods does not reflect normal purchasing intent.

Tests perform best when:

- Traffic sources are stable
- Pricing and promotions remain consistent
- User intent is predictable

If conditions change mid-test, results should be interpreted with caution, or the experiment should be paused.

Mistake 9: Overlapping Experiments on the Same Pages

Running multiple tests on the same page at the same time is a common execution mistake. This often happens when different teams test different ideas without coordination.

On Shopify, overlapping tests can interfere with each other at the theme or script level. Variants may combine in unexpected ways, making it impossible to isolate the impact of a single change.

To avoid this, tests should be scoped clearly by:

- Page or template
- Audience segment
- Test timeframe

Clear boundaries help preserve data integrity and reduce noise in results.
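Scoping by page or template can be enforced in code by giving each template its own "layer" and letting every experiment in that layer own a disjoint range of hash buckets. The sketch below assumes each visitor has a stable ID (for example, from a first-party cookie). The layer and experiment names are hypothetical. Salted hashing keeps assignment stable across sessions without storing the assignment itself.

```python
import hashlib
from typing import Dict, Optional, Tuple


def bucket(visitor_id: str, salt: str, buckets: int = 100) -> int:
    """Map a visitor to a stable bucket in [0, buckets) with a salted SHA-256 hash."""
    digest = hashlib.sha256(f"{salt}:{visitor_id}".encode("utf-8")).hexdigest()
    return int(digest[:8], 16) % buckets


# Hypothetical layer for one template: each experiment owns a disjoint slice of
# buckets, so a visitor can be enrolled in at most one test on this template.
PRODUCT_PAGE_LAYER: Dict[str, range] = {
    "pdp-shipping-message": range(0, 50),
    "pdp-image-gallery": range(50, 100),
}


def assign(visitor_id: str, layer_name: str,
           layer: Dict[str, range]) -> Optional[Tuple[str, str]]:
    """Return (experiment, variant) for this visitor, or None if held out of the layer."""
    slot = bucket(visitor_id, salt=layer_name)
    for experiment, slots in layer.items():
        if slot in slots:
            # A second, independently salted hash picks the variant within the experiment.
            variant = "A" if bucket(visitor_id, salt=experiment, buckets=2) == 0 else "B"
            return experiment, variant
    return None


print(assign("visitor-123", "product-page", PRODUCT_PAGE_LAYER))
```

Because the two experiments split the bucket range between them, a visitor sees at most one product-page test at a time. The trade-off is traffic: each experiment receives only part of the template's visitors, which lengthens the time needed to reach the required sample size.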

Mistake 10: Ignoring Mobile Experience During the Test

Mobile traffic accounts for the majority of sessions on most Shopify stores. Despite this, many tests are still reviewed primarily from a desktop perspective.

Small layout issues, loading delays, or interaction problems on mobile can significantly affect conversion rates. These issues often go unnoticed if mobile performance is not checked during the test.

While a test is running, it helps to:

- Review variants on multiple devices
- Compare mobile and desktop performance separately
- Watch for abnormal drop-offs in mobile conversion

Ignoring mobile behavior can turn a promising test into a misleading one.
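Comparing devices separately takes only a small amount of bookkeeping. The sketch assumes session records of the form `(variant, device, converted)`. The function names and the 10% relative-drop threshold are hypothetical, and the helper flags candidates for a closer look rather than testing significance.

```python
from collections import defaultdict


def conversion_by_segment(sessions):
    """Return {(variant, device): conversion rate} from (variant, device, converted) records."""
    counts = defaultdict(lambda: [0, 0])  # (variant, device) -> [orders, sessions]
    for variant, device, converted in sessions:
        counts[(variant, device)][0] += int(converted)
        counts[(variant, device)][1] += 1
    return {key: orders / total for key, (orders, total) in counts.items()}


def devices_with_drop(rates, control="A", treatment="B", max_relative_drop=0.10):
    """List devices where the treatment converts noticeably worse than control."""
    flagged = []
    for device in sorted({device for (_, device) in rates}):
        base = rates.get((control, device))
        test = rates.get((treatment, device))
        if base and test is not None and test < base * (1 - max_relative_drop):
            flagged.append(device)
    return flagged


# Made-up records for illustration only.
sessions = (
    [("A", "mobile", True)] * 30 + [("A", "mobile", False)] * 970
    + [("B", "mobile", True)] * 22 + [("B", "mobile", False)] * 978
    + [("A", "desktop", True)] * 40 + [("A", "desktop", False)] * 960
    + [("B", "desktop", True)] * 48 + [("B", "desktop", False)] * 952
)
rates = conversion_by_segment(sessions)
print(devices_with_drop(rates))
```

In this made-up data both variants convert 70 of 2,000 sessions overall, so the blended rate shows no difference at all, while the per-device view reveals that variant B converts worse on mobile.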

GemX helps Shopify merchants monitor traffic distribution, device-level performance, and variant exposure in real time, making it easier to detect execution issues before they distort results.

Post-Testing A/B Testing Mistakes

When an A/B test ends, many teams assume the hard part is over. In reality, this is the stage where the most damaging mistakes often happen. On Shopify, post-testing decisions tend to move fast, especially when a variant appears to “win.”

The risk here is not missing data. It is over-trusting it.

Mistake 11: Treating Small Lifts as Meaningful Wins

A slight increase in conversion rate can feel like a success, particularly when a test has been running for weeks. However, small lifts are not always reliable, especially when traffic volume is limited or uneven.

On Shopify stores, minor changes in traffic source mix, returning customer behavior, or product availability can easily create short-term improvements that do not repeat after rollout.

Before acting on a result, it helps to ask:

- Does this lift hold across multiple days and traffic segments?
- Is the improvement large enough to matter at scale?

Not every statistically significant improvement is a business win.
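Both questions can be checked numerically. The sketch below runs a standard two-proportion z-test and builds a confidence interval for the difference in conversion rate. The `min_practical_lift` threshold stands in for whatever lift a team decides is worth shipping and is an assumption, as are the function and key names.

```python
import math
from statistics import NormalDist


def lift_summary(conv_a, n_a, conv_b, n_b, confidence=0.95, min_practical_lift=0.02):
    """Two-proportion z-test plus a confidence interval on the difference (B - A)."""
    p_a, p_b = conv_a / n_a, conv_b / n_b
    diff = p_b - p_a

    # Pooled standard error under the null hypothesis of no difference.
    p_pool = (conv_a + conv_b) / (n_a + n_b)
    se_pool = math.sqrt(p_pool * (1 - p_pool) * (1 / n_a + 1 / n_b))
    z_stat = diff / se_pool
    p_value = 2 * (1 - NormalDist().cdf(abs(z_stat)))

    # Unpooled standard error for the confidence interval.
    se = math.sqrt(p_a * (1 - p_a) / n_a + p_b * (1 - p_b) / n_b)
    z = NormalDist().inv_cdf(0.5 + confidence / 2)
    ci = (diff - z * se, diff + z * se)

    relative_lift = diff / p_a
    # Act only if the whole interval is above zero AND the lift is big enough to matter.
    worth_acting = ci[0] > 0 and relative_lift >= min_practical_lift
    return {"relative_lift": relative_lift, "p_value": p_value,
            "diff_ci": ci, "worth_acting": worth_acting}


print(lift_summary(300, 10_000, 330, 10_000))
```

With 300 versus 330 conversions out of 10,000 sessions each, variant B shows a 10% relative lift, yet the interval for the difference still includes zero and the p-value is above 0.2. The same lift on ten times the traffic would clear both checks.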

Mistake 12: Ignoring Secondary Impact on Revenue and Funnel Quality

Conversion rate is rarely the whole story. A variant that increases add-to-cart or checkout completion may still reduce average order value or overall revenue.

This happens frequently when design changes simplify the page too aggressively or remove information that helps customers make higher-value decisions.

After a test ends, results should be reviewed through more than one lens:

- Conversion rate
- Revenue per session
- Downstream behavior in the funnel

Without this broader view, teams risk optimizing for volume while hurting overall performance.
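Revenue per session ties these lenses together, since it equals conversion rate multiplied by average order value. The sketch below computes all three per variant from totals. The class name and the numbers are illustrative, not real results.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class VariantTotals:
    sessions: int
    orders: int
    revenue: float

    @property
    def conversion_rate(self) -> float:
        return self.orders / self.sessions

    @property
    def average_order_value(self) -> float:
        return self.revenue / self.orders if self.orders else 0.0

    @property
    def revenue_per_session(self) -> float:
        # Equivalent to conversion_rate * average_order_value.
        return self.revenue / self.sessions


# Illustrative totals, not real results.
control = VariantTotals(sessions=10_000, orders=300, revenue=27_000.0)
variant = VariantTotals(sessions=10_000, orders=330, revenue=26_400.0)

for name, t in (("A", control), ("B", variant)):
    print(f"{name}: CR={t.conversion_rate:.2%}  AOV={t.average_order_value:.2f}  "
          f"RPS={t.revenue_per_session:.2f}")
```

Here variant B converts 3.30% of sessions against 3.00% for A, but average order value drops from 90 to 80, so revenue per session falls from 2.70 to 2.64. A conversion-only readout would call B the winner.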

Mistake 13: Declaring a Winner and Moving On Too Quickly

Many tests end with a simple conclusion. Variant B won. Variant A lost. The change gets applied, and the team moves on to the next idea.

This approach wastes learning.

Every A/B test contains insights beyond the winner. Patterns in user behavior, segment differences, and unexpected outcomes often point to new opportunities that never get explored.

Post-test analysis works best when teams pause to document:

- What changed and why it mattered
- Where the impact was strongest or weakest
- What assumptions were supported or challenged

Without this step, teams often repeat similar tests without realizing it.

Mistake 14: Applying Test Results Too Broadly

A/B tests answer very specific questions. Problems arise when those answers are applied across the entire store without context.

For example, a change that wins on one product page does not automatically belong on every product page. A layout that succeeds on desktop does not guarantee the same result on mobile. Shopify stores often have diverse audiences and product categories that behave differently.

Before rolling out changes widely, it helps to consider:

- Whether the test audience matches the broader store audience
- Whether the result depends on product type or traffic source
- Whether follow-up tests are needed before full rollout

Careful expansion reduces the risk of unintended consequences.

Mistake 15: Treating “No Winner” as a Failed Test

Not every A/B test produces a clear winner. This outcome is often seen as wasted effort, but it should not be.

On Shopify, a neutral result from a test with enough traffic often indicates that the tested change does not address the real conversion barrier. That insight alone can save time and prevent larger mistakes.

A test without a winner still provides value when it:

- Eliminates weak assumptions
- Narrows the problem space
- Informs better hypotheses for future tests

When “no result” is documented and understood, it becomes part of a stronger experimentation process.
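Whether a neutral result is informative depends on how precise it is. One way to tell the cases apart is to compare the confidence interval for the difference (B minus A) against the smallest effect that would matter to the business. The function below is a hypothetical helper that assumes the interval has already been computed.

```python
def classify_result(ci_low: float, ci_high: float, min_effect: float) -> str:
    """Classify a test from the confidence interval on the difference (B - A)."""
    if ci_low > 0:
        return "B better"
    if ci_high < 0:
        return "A better"
    if -min_effect < ci_low and ci_high < min_effect:
        # The interval rules out any effect the business would care about.
        return "neutral: change does not matter at this size"
    # The interval is too wide to rule out a meaningful effect either way.
    return "inconclusive: not enough data to rule out a meaningful effect"


print(classify_result(-0.001, 0.001, min_effect=0.003))
print(classify_result(-0.002, 0.008, min_effect=0.003))
```

An interval of -0.1 to +0.1 percentage points, with 0.3 points as the smallest meaningful effect, counts as a genuine neutral result: the change does not address the barrier. An interval of -0.2 to +0.8 points only means the test could not tell, which is a traffic problem rather than a finding.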

Post-test decisions become more reliable when experiment results are reviewed alongside revenue-level and funnel-level context, rather than conversion rate alone.

With GemX, experiment results can be analyzed at both page and order levels, helping teams understand not just which variant converted, but how it affected revenue and funnel quality.

A/B Testing Best Practices Checklist

After seeing where A/B tests commonly go wrong, it helps to step back and lock in a simple set of principles. This checklist is not meant to make testing more complex. It exists to keep experiments clean, interpretable, and worth the effort, especially in a Shopify environment where traffic, themes, and apps constantly interact.

Before launching a test, make sure the foundation is solid:

- The test starts with a clear hypothesis tied to a single change and a single primary metric.
- The tested page plays a meaningful role in the conversion funnel and receives enough traffic to support a reliable result.
- The chosen metric reflects real business impact, not just surface-level engagement.

Once the test is live, execution discipline matters more than speed:

- Traffic is split as planned and verified early, rather than assumed to be correct.
- The test runs long enough to capture normal shopping behavior, including weekdays, weekends, and repeat visits.
- No overlapping experiments interfere with the same page, audience, or template.
- Mobile experience is reviewed regularly, not only at launch.

When the test ends, decisions should be deliberate, not rushed:

- Results are reviewed across key segments such as device type, traffic source, and user type.
- Secondary metrics like revenue per session and average order value are checked alongside conversion rate.
- Learnings are documented clearly, including unexpected outcomes and failed assumptions.
- Rollouts are scoped carefully, with follow-up tests planned when needed.

This checklist does not guarantee winning tests. What it does is make it far more likely that every experiment produces a reliable signal, even when the outcome is neutral. Over time, that consistency matters more than chasing quick wins.

Conclusion

A/B testing works best when it is treated as a learning system, not a shortcut to quick wins. For Shopify merchants and eCommerce teams, disciplined experimentation helps protect revenue, reduce guesswork, and turn everyday traffic into reliable insights that compound over time. Understanding where experiments break down makes it easier to avoid costly A/B testing mistakes and build tests that actually inform better decisions.

To keep improving your testing process, continue exploring practical guides and real experiment examples with GemX.